Product launch - Triumphs and Tribulations

Recently we launched a consumer-facing web app on a high-traffic channel. It was a nerve-wracking 4-day experience of staying up until 3 am, with highs and lows.

Rather than try to analyze what went wrong and what went well, I would like to focus on the journey and the emotions, and share with everyone what it feels like to launch a product.

We were tasked with building the web app two months ago, on a “moving target” basis. That means there was an end goal, but it wasn’t clear what steps had to be taken to get there, because the requirements kept changing - the goals changed every day, sometimes twice a day.

The high level architecture looks like this - there are three separate systems:

- System A, a 3rd party Salesforce app

- System B, a cloud platform that provides login systems, event logging and analytics

- System C, a consumer-facing web app

We were tasked with building C and connecting it to A and B.

So we started development, breezing through the first two months with moderate stress (which included the daily milestone changes). Everyone thought the product looked good, but it hadn’t been tested against real users yet.

Three days before launch, concerns came up about the stability of all three systems. If any one of them went down, it would mean a big service disruption for end users. We started thinking about full-on stress tests and how to simulate a real user load on the front page.

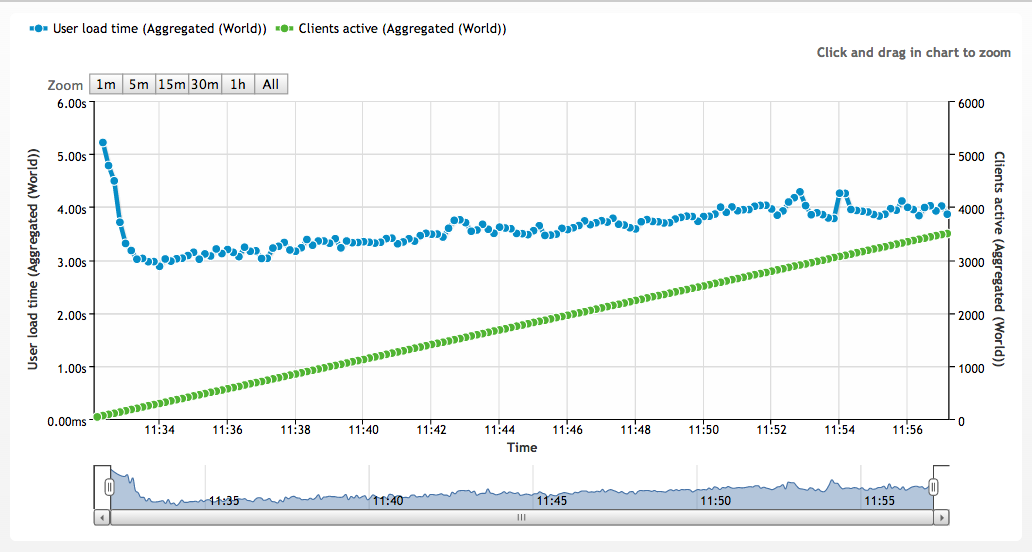

I whipped up a simple load test, hammering our web app with 5000 requests per second for 36 minutes. It was the most expensive load test I have ever had to run.

(Whew), the results looked good. We had a failure rate of about 0.02%, a negligible amount. But the loading time still wasn’t good enough. We had to start work on an ajax-based loading system, which promised to cut the loading time by 50%. The thing is, there was no time to complete the ajax loading system by launch day, because we had other issues to handle: more requirements were piling up, right up to launch day itself.
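To put those load test numbers in perspective, here is a quick back-of-the-envelope calculation. It is only a sketch: it assumes the rate held steady at 5000 requests per second for the full 36 minutes and that "about 0.02%" is close to the real figure. The variable names are mine, just for illustration.

```python
# Back-of-the-envelope numbers for the load test described above.
# Assumption: a steady 5000 requests/second for the whole 36 minutes.
REQUESTS_PER_SECOND = 5000
DURATION_MINUTES = 36
FAILURE_RATE = 0.0002  # "about 0.02%", written as a fraction

total_requests = REQUESTS_PER_SECOND * DURATION_MINUTES * 60
failed_requests = round(total_requests * FAILURE_RATE)

print(f"total requests:  {total_requests:,}")   # 10,800,000
print(f"failed requests: {failed_requests:,}")  # roughly 2,160
```

So "negligible" here still means a couple of thousand failed requests out of almost 11 million.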

Come launch day, we went ahead without ajax loading, and it turned out ok. However, a bigger issue occurred: there was some miscommunication about a certain mechanic for System C. It caused an overflow of free items given out to end users. About 900 users were able to acquire some real items for free within the first 10 minutes of launch. We ended up taking some heat and a monetary loss. Ouch.

Day 2 was all disaster management for the first 8 hours. We had to build a custom patch and identify the end users who were deemed worthy of the real items. A lot of customer support work :(

In the second half of Day 2, we scrambled to fix the overflow issue. The first test looked promising, but we couldn’t deploy the patch to the live version until the next evening. In the meantime, we had to fix other logic issues in the end-consumer templates.

On Day 3 of launch, the overflow still managed to happen within the first 10 seconds of a mini-product going live. We figured out that simultaneous writes to the database were causing contention. The server couldn’t handle more than 5 writes per second. We spent the next 8 hours building a sharded counter for our systems, to spread the writes out and make them more efficient. 3 am woes. Plus there were more logic errors in the end-user templates (causing some users to see unwanted UI elements). Mini victory: we finally managed to complete the ajax loading system in time for a midnight patch.
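For anyone curious what a sharded counter looks like, here is a minimal in-memory sketch of the general idea, not our actual production code. The stock is split across several shards, each with its own lock, so simultaneous claims mostly land on different shards instead of fighting over one record. Everything here is hypothetical and for illustration only: the class name `ShardedStockCounter`, the shard count of 10, and the use of threads and locks as a stand-in for database writes.

```python
import random
import threading


class ShardedStockCounter:
    """Splits a fixed stock across several independently locked shards."""

    def __init__(self, total_stock, num_shards):
        base, extra = divmod(total_stock, num_shards)
        # The first `extra` shards hold one more item, so the shards
        # always add up to exactly total_stock.
        self._remaining = [base + (1 if i < extra else 0)
                           for i in range(num_shards)]
        self._locks = [threading.Lock() for _ in range(num_shards)]

    def try_claim(self):
        """Claim one item. Returns False once every shard is empty."""
        order = list(range(len(self._remaining)))
        random.shuffle(order)  # spread writers across shards
        for i in order:
            with self._locks[i]:
                if self._remaining[i] > 0:
                    self._remaining[i] -= 1
                    return True
        return False

    def remaining(self):
        return sum(self._remaining)


def simulate(total_stock=100, num_shards=10, num_users=900):
    """Let num_users threads race for total_stock items."""
    counter = ShardedStockCounter(total_stock, num_shards)
    results = []
    results_lock = threading.Lock()

    def user():
        ok = counter.try_claim()
        with results_lock:
            results.append(ok)

    threads = [threading.Thread(target=user) for _ in range(num_users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(results), counter.remaining()


if __name__ == "__main__":
    claimed, left = simulate()
    print(f"claimed: {claimed}, left: {left}")
```

Because each shard checks and decrements its own stock under its own lock, the total handed out can never exceed the stock, no matter how many users show up at once. In a real database the same trick means each write touches a different row, which is what relieves the contention.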

Day 4 looked more promising. We had an item come up at 6pm, with 100 people being able to acquire it. Within 2 minutes, it was gone, with zero overflow. Yay! But wait, yesterday’s UI fixes didn’t show. Help! Luckily we found a simple fix on the backend, and the issue was solved within 5 minutes. What a scare!

At the end of Day 4, everyone was weary from the product launch. But with everything finally (quasi) stabilized, the team got to taste some success, and it was fulfilling.

I let everyone leave by 8pm. We’ll see what happens tomorrow (fingers crossed).
